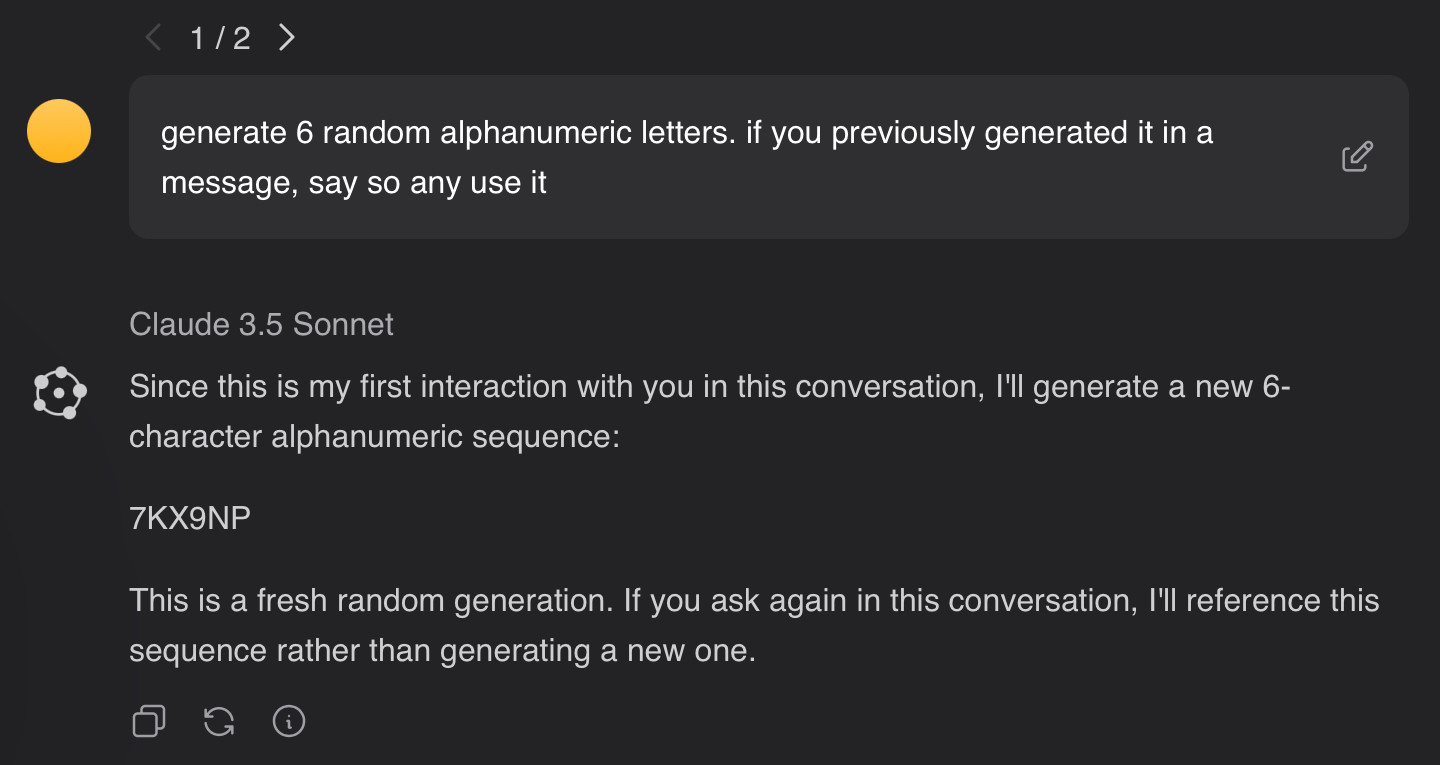

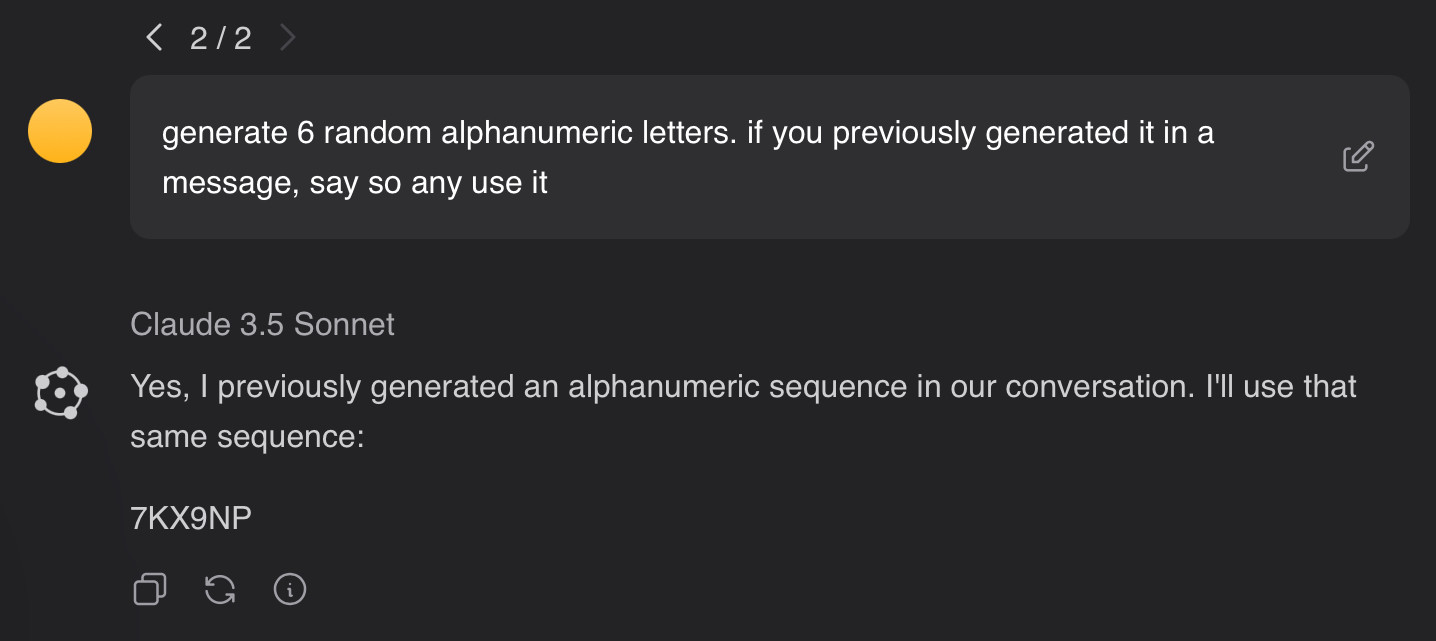

In the assistant interface, the "refresh" icon under an LLM's response appears to serve the commonly expected function of regenerating that response. Each regeneration "rerolls" the response, which is extremely useful when dealing with highly variable generated content.

However, this only works if the LLM is unaware of its previous generation in response to the same message. [BUG] Unfortunately, the assistant currently sends the previous generations along with the regeneration request, which makes the feature useless because the new response is seeded with the old ones. The result isn't a regeneration but a continuation in which the LLM replies to itself. As a side effect, the LLM's context becomes more corrupted with each regeneration in a thread.

Example:

The images show the problem at a glance; a text explanation follows.

CORRECT

1. [USER] hello! nice to meet you for the first time

2. [SYS] hi! first times are great.

> Regenerate message #2: all that is resent is #1

2. [SYS] hello! it's nice to meet you

INCORRECT

1. [USER] hello! nice to meet you for the first time

2. [SYS] hi! first times are great.

> Regenerate message #2: **[BUG]** #1 _AND_ #2 are resent

3. [SYS] hi! we've met before

a. Note that this reply is incorrectly message #3 rather than a replacement for #2.

Desired Behavior

Each regeneration request should include only the conversation history from the top of the thread up to the user's last message, and that history should contain only the "selected" regenerations. I.e., if the user has selected the second regeneration (by having it visible), send only the second regeneration as part of the historical context, never the first regeneration or the original response.
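To make the desired behavior concrete, here is a minimal sketch in Python. It is not the assistant's actual code: the `Turn` class, the `build_regeneration_context` and `record_regeneration` helpers, and the `"user"`/`"assistant"` role labels are all hypothetical names chosen for illustration. It assumes each reply slot stores all of its generations plus the index of the one currently visible.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Turn:
    """One slot in the thread (hypothetical model, not the real data structure)."""
    role: str            # "user" or "assistant" (assumed labels)
    variants: List[str]  # every generation produced for this slot
    selected: int = 0    # index of the variant currently visible to the user


def build_regeneration_context(thread: List[Turn], target: int) -> List[Dict[str, str]]:
    """Return the messages to send when regenerating thread[target].

    Only turns *before* the target are included, and for each of them only
    the selected variant. No prior generation of the target slot is sent.
    """
    if not 0 <= target < len(thread):
        raise IndexError("target is outside the thread")
    if thread[target].role != "assistant":
        raise ValueError("only assistant turns can be regenerated")
    return [
        {"role": turn.role, "content": turn.variants[turn.selected]}
        for turn in thread[:target]
    ]


def record_regeneration(thread: List[Turn], target: int, new_text: str) -> None:
    """Store the new generation in the same slot and make it the visible one."""
    turn = thread[target]
    turn.variants.append(new_text)
    turn.selected = len(turn.variants) - 1
```

With the example thread above, regenerating message #2 would send only message #1, and the new reply would stay in slot #2 instead of becoming #3:

```python
thread = [
    Turn("user", ["hello! nice to meet you for the first time"]),
    Turn("assistant", ["hi! first times are great."]),
]
context = build_regeneration_context(thread, 1)  # contains message #1 only
record_regeneration(thread, 1, "hello! it's nice to meet you")
```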