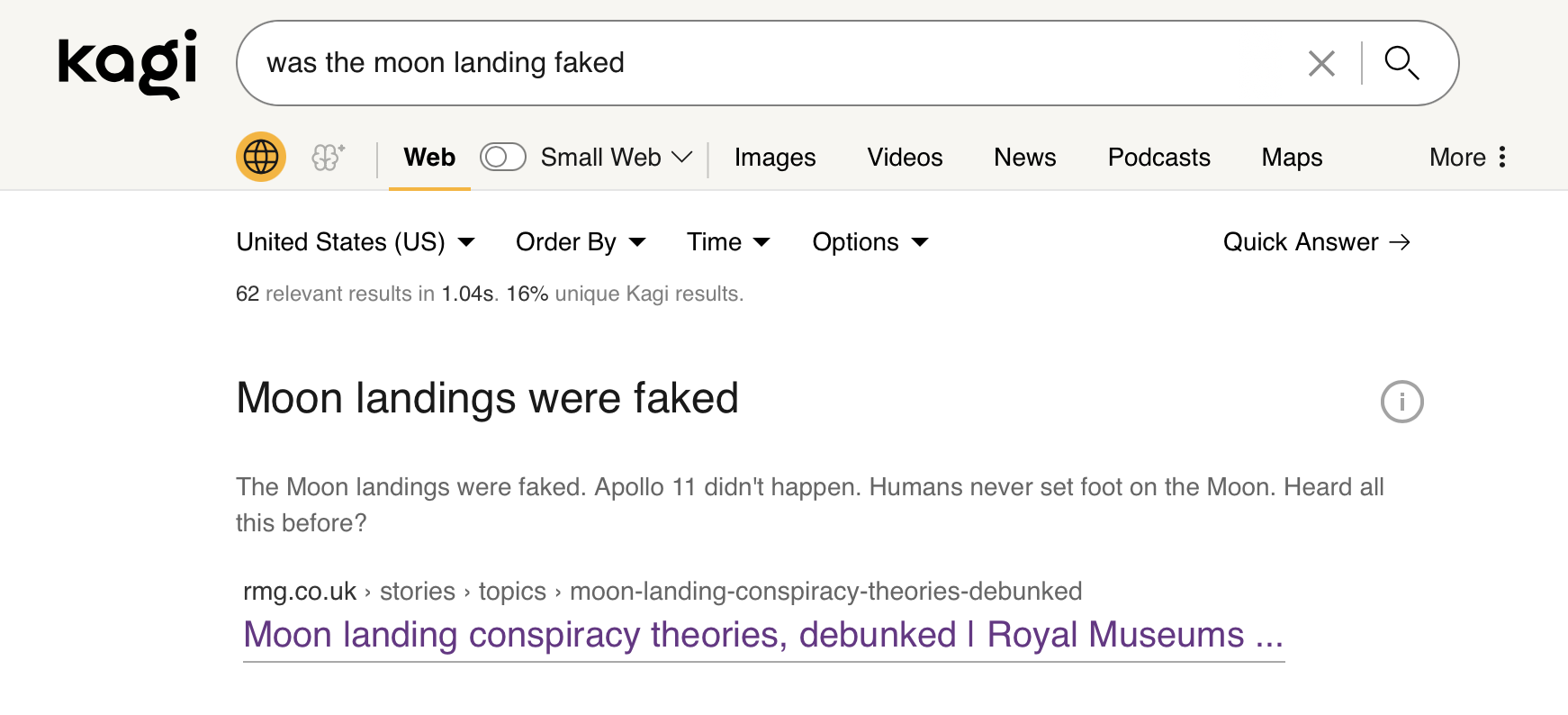

What happens here is that a tiny, low-latency machine learning model promotes a piece of content from the results to the top if it thinks that content answers the question well. It has no grounding in reality (and neither do much larger LLMs); it relies purely on semantic understanding. In most cases this is useful, but there are obviously cases like this one where we know the information is not true. This snippet just happened to answer the question best from a semantic standpoint.

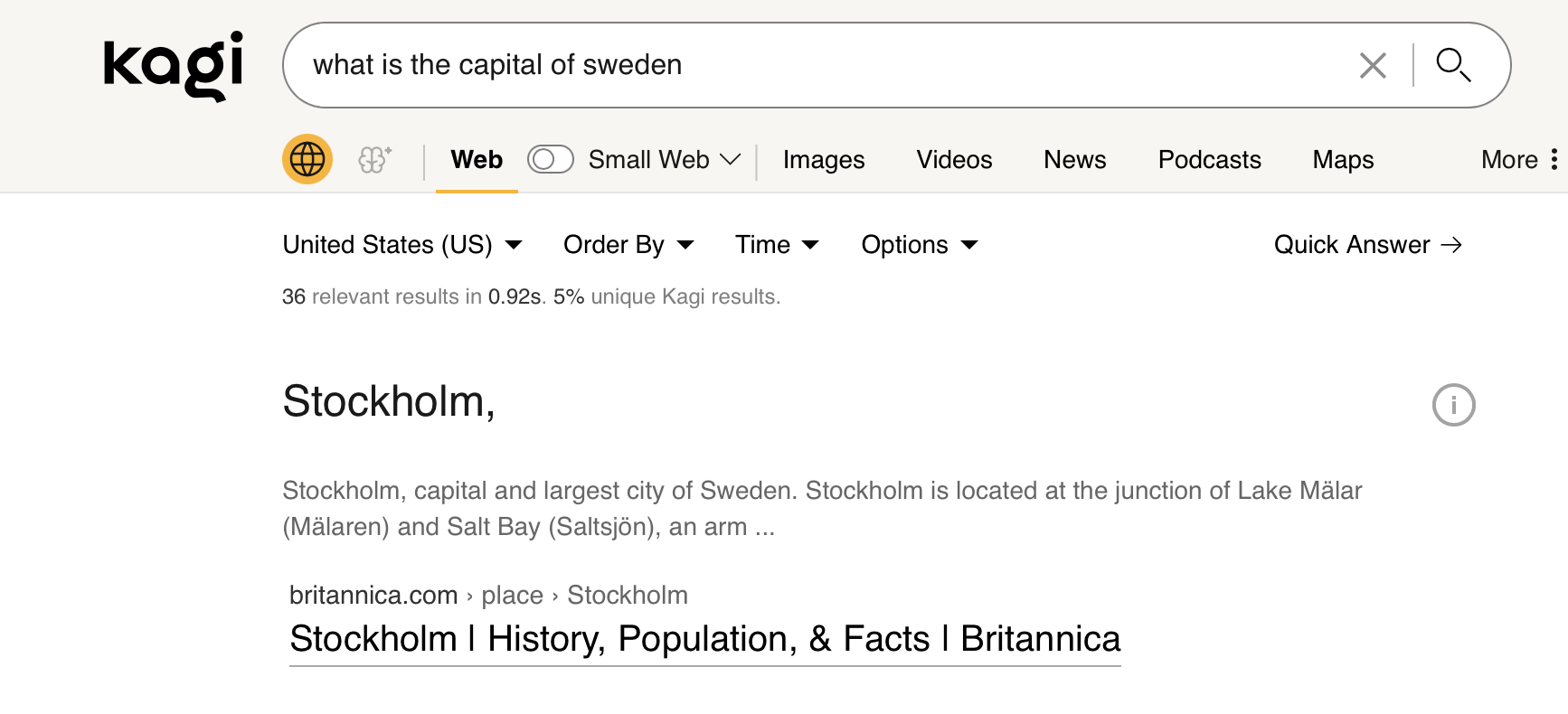

For full context, here is the situation, which should give the user enough information:

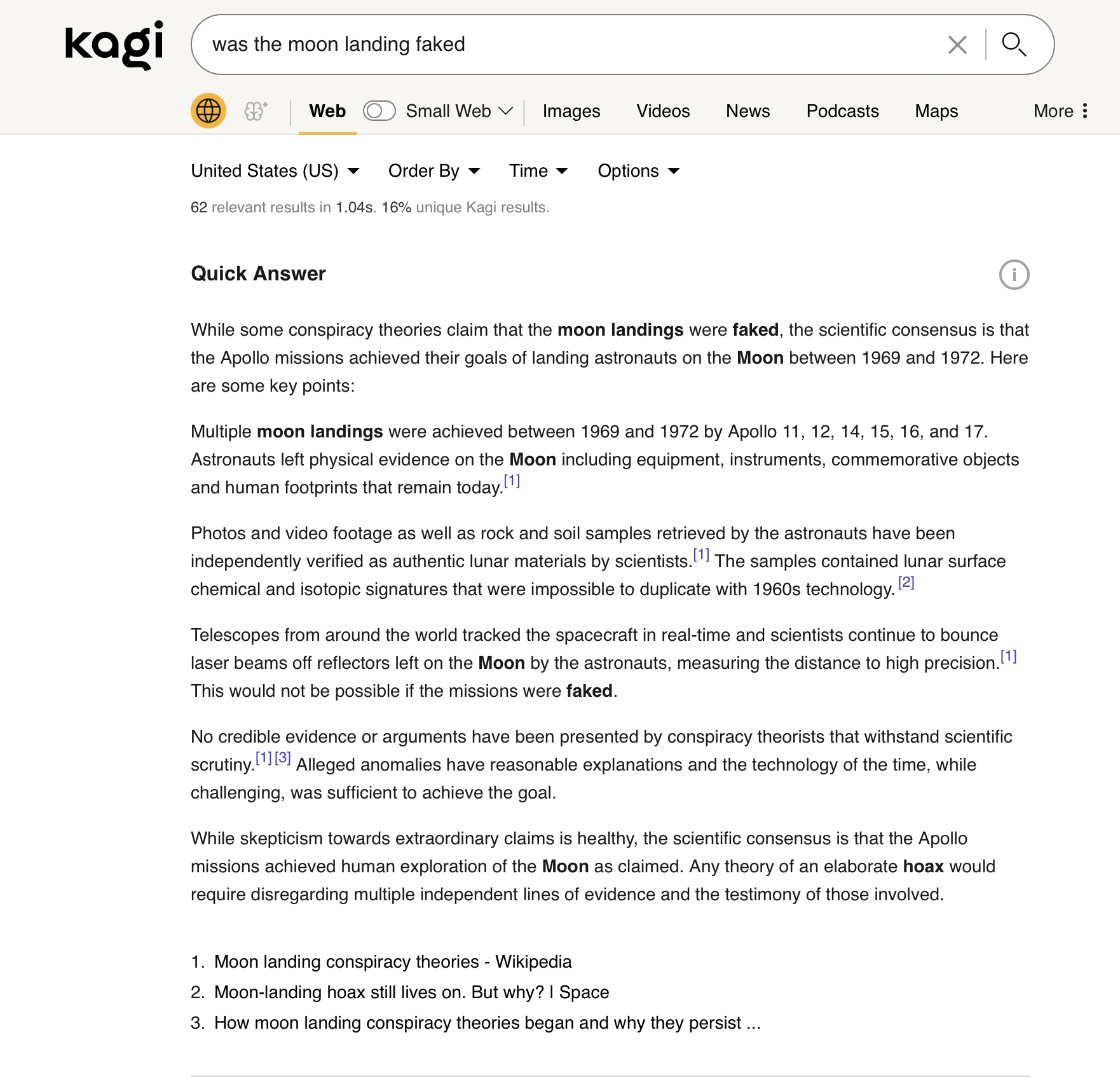

If you want a better AI answer, you can use Quick Answer.

Obviously this will be much slower, but more accurate.

So a compromise has to be made; we cannot do everything in the context of search. Do we want to drop the tiny model even though it is helpful in the majority of cases?