What happened? How did it happen? What are the steps to replicate the issue?

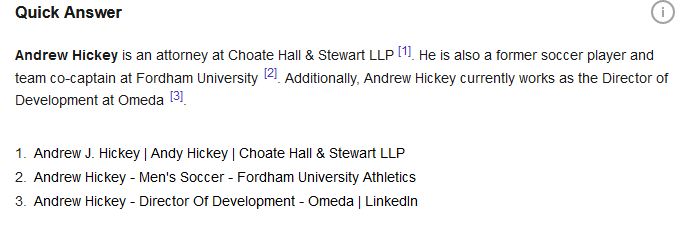

These are multiple people, whom your LLM is asserting are all the same person. You're going to end up saying something libellous if you're not very, very lucky.

What did you expect to happen? Describe the desired functionality.

That it would either keep different people apart or not talk about them at all. Making up answers is significantly worse than not answering.

At the very least this should have a clear warning above it, rather than people having to click the "i" to be told that it's the LLM-generated answer.