As the model's tooltip suggests, DeepSeek excels at translation tasks. While Kagi Translate works great, sometimes I specifically want to use DeepSeek (v3.1 Terminus in Assistant), especially for very large blocks of text. Ironically, the model has reasoning enabled by default, which causes various issues with the resulting output.

Despite prompt wrangling, the output ends up in one of several ways:

- Translated text comes out properly, after a brief (roughly three-paragraph) thinking block

- Translated text comes out properly, with the thinking block magically absent

- For some reason, the translated text ends up inside the thinking block with no actual reasoning in it (also a pain to copy, since reasoning text can only be copied by dragging the mouse over it)

- The thinking block reasons about the provided instruction prompt over and over, repeating itself for all eternity

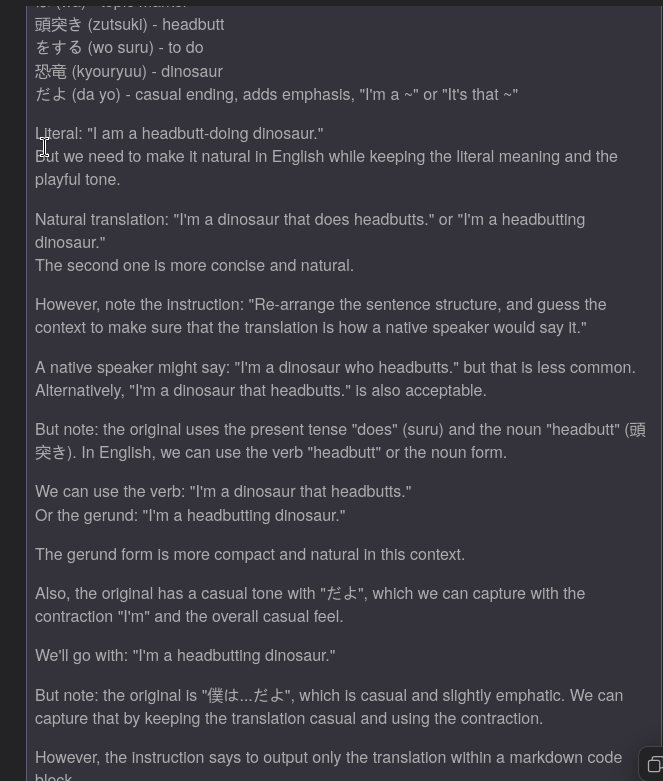

- The thinking block reasons about the provided text in exhaustive detail, as seen in the image above. On short inputs this merely wastes some output credits, but on large blocks of text it can reason about each individual line, one by one, until the thinking block stretches to the length of a football field

So, yeah! It'd be great if there were a non-reasoning option, like Qwen, Kimi K2, and others have. Clicking the retry button until a usable output appears isn't exactly optimal and wastes a lot of AI credits.

As a side note, the v3.2 exp model came out some time ago, and it seems to be cheaper than v3.1 on OpenRouter, somehow.