Features

Two things I would like to see in the Assistant to help with context window management: the current context window usage (in tokens and percentage-used), and a slash command to summarize the current conversation (bringing the conversation length down, and making future messages in the thread cheaper as a result).

I should preface this by saying that I know Kagi automatically summarizes long conversations in the background. I would also like the ability to trigger this manually, which I discuss below.

Show the context size, as well as the maximum for the currently selected model

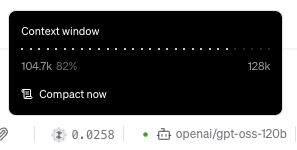

This is something I initially found in Google AI Studio and Codename Goose. It's not very complex: show the context window size of the selected window, and then the current conversation context size. For instance, here is how it's shown in Goose:

In the black tooltip above, the context window of the selected model, GPT-OSS, is shown as 128k tokens. There's also a progress bar showing that my current conversation consists of 104.7k tokens, or about 82% of the model's context window.

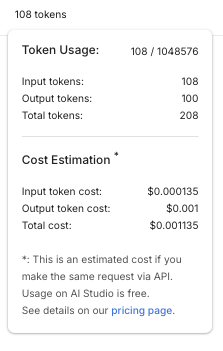

In AI Studio, this information is also shown in a tooltip near the top of the page:

Similar to Goose, the "token usage" is shown as the current conversation size out of the model's total context window. I don't personally find the differentiation between input and output tokens to be helpful here, since they are all counted as input for each new message, anyway (minus reasoning traces, generally).

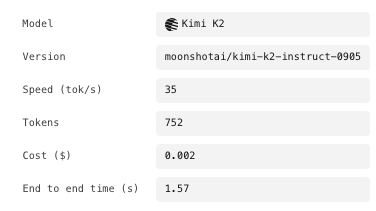

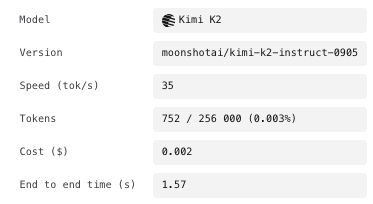

In Kagi Assistant, I imagine that this information could be shown inside the existing message tooltip, in the "tokens" field. Here is the current tooltip:

The only modification needed would be to be to show the context window size, and possibly a percentage used:

(It probably doesn't need to go to three decimal places; this is just an example.)
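As a rough illustration of the proposed display, the sketch below formats a "used / window (percent)" string rounded to a whole percentage. The function names are hypothetical, "k" is assumed to mean 1,000 tokens, and the numbers are the ones from the Goose tooltip described earlier; this is not how Kagi or Goose actually compute the field.

```python
def format_tokens(n: int) -> str:
    """Format a token count compactly, assuming "k" means 1,000 tokens."""
    if n < 1000:
        return str(n)
    # One decimal place, then drop a trailing ".0" so 128000 reads as "128k".
    text = f"{n / 1000:.1f}".rstrip("0").rstrip(".")
    return f"{text}k"


def format_context_usage(used: int, window: int) -> str:
    """Hypothetical tooltip text: tokens used, context window, whole-number percent."""
    if window <= 0:
        raise ValueError("context window must be positive")
    percent = round(100 * used / window)
    return f"{format_tokens(used)} / {format_tokens(window)} tokens ({percent}%)"


# Figures from the Goose tooltip described above.
print(format_context_usage(104_700, 128_000))  # 104.7k / 128k tokens (82%)
```

Rounding to a whole percentage keeps the field short, which is all that's needed to judge how close a conversation is to the limit.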

Surface a "summarize" slash command

Kagi already summarizes long conversations to reduce the number of tokens sent. However, as far as I know, there's no documentation about when this process occurs. I think it would be helpful to have a manual /summarize command to trigger this process myself, so that I can keep costs down.

Goose has a button that does exactly this, "compact now", shown in the context tooltip:

All this button does is summarize the current conversation so that it can be passed as a much more compact input in subsequent messages in the thread, reducing the input token count and cost. Kagi should already have the ability to do this, so I don't see any harm in surfacing a slash command that lets users trigger it themselves.
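To make the idea concrete, here is a minimal sketch of what "compacting" a conversation could look like: everything except the last few messages is replaced by a single summary message. The `Message` type, the `compact` and `stub_summarize` functions, the use of a "system" role for the summary, and the ~4-characters-per-token estimate are all illustrative assumptions, not Goose's or Kagi's actual implementation; a real version would call an LLM to write the summary and count tokens with the model's own tokenizer.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    role: str      # "user", "assistant", or "system"
    content: str


def estimate_tokens(messages: List[Message]) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    return sum(len(m.content) for m in messages) // 4


def compact(messages: List[Message],
            summarize: Callable[[List[Message]], str],
            keep_last: int = 2) -> List[Message]:
    """Replace all but the last `keep_last` messages with one summary message."""
    if keep_last < 0:
        raise ValueError("keep_last must be non-negative")
    if len(messages) <= keep_last:
        return list(messages)  # nothing older to summarize
    split = len(messages) - keep_last
    older, recent = messages[:split], messages[split:]
    summary = Message("system", "Summary of earlier conversation: " + summarize(older))
    return [summary] + recent


def stub_summarize(older: List[Message]) -> str:
    # Hypothetical stand-in for an LLM summarization call.
    return f"{len(older)} earlier messages about getting the script working."


history = [
    Message("user", "Here is my script. " + "x" * 4000),
    Message("assistant", "Try this fix. " + "y" * 4000),
    Message("user", "Still failing: " + "z" * 4000),
    Message("assistant", "Here is the final working version."),
    Message("user", "Now add logging."),
]
compacted = compact(history, stub_summarize)
print(estimate_tokens(history), "->", estimate_tokens(compacted))
```

Keeping the most recent messages verbatim is one possible design choice: it preserves the latest working state while dropping the bulky troubleshooting history.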

I don't think a button is needed, though having to type and send a slash command on its own to accomplish this might feel clunky. I'm not sure exactly what the best interface element would be.

Use-case

Showing the context size/usage

Every model performs worse at longer context lengths, in terms of both recall (see RULER) and speed. As such, I think the main use-case for this feature is to help me decide when to summarize the current conversation, or just start a new one, as I approach the limit for the selected model. It would also be useful to have each model's context window size available this way, so that I don't need to look them up myself.

Summarize slash command

I think I made the case for this already, but to reiterate: long conversations generally result in worse performance from the LLM and in higher costs per message. I believe it would be beneficial to be able to manually summarize/compact the current conversation to help mitigate these issues.
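A back-of-the-envelope sketch of why cost grows with length: if the full history is resent as input on every turn, each turn's input includes everything before it, so total input tokens grow roughly quadratically with the number of turns. The function below is hypothetical and simplified: it treats each turn as a fixed-size block of tokens, ignores output pricing and any provider-side prompt caching, and models compaction as replacing the history with a summary of a given size.

```python
from typing import Optional


def total_input_tokens(turns: int, tokens_per_turn: int,
                       compact_after: Optional[int] = None,
                       summary_tokens: int = 0) -> int:
    """Sum the input tokens sent over `turns` turns, assuming the full history
    is resent each turn and each turn adds `tokens_per_turn` to it."""
    history = 0
    total = 0
    for turn in range(1, turns + 1):
        history += tokens_per_turn    # this turn's block joins the history
        total += history              # the whole history is sent as input
        if compact_after is not None and turn == compact_after:
            history = summary_tokens  # history replaced by a short summary
    return total


print(total_input_tokens(10, 2_000))                                        # 110000
print(total_input_tokens(10, 2_000, compact_after=5, summary_tokens=1_000))  # 65000
```

Under these assumptions, compacting halfway through a ten-turn conversation cuts total input by about 41% (65,000 vs. 110,000 tokens), and every turn after the compaction is cheaper than it would otherwise have been.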

An example use-case would be programming. Often, models get, say, 90% of the way there on the first try, but need some steering and follow-up to fix the remaining errors. After I get everything working, I might want to send additional queries based on this context, but I don't need all the previous context from troubleshooting the errors. In this case, it would make sense to use /summarize on the conversation so that I keep the context about my code without the unnecessary tokens related to the bugs.