Various LLM providers support prompt caching, which reduces costs on long threads by caching the earlier sections of the prompt. This appears to be in use on some of the models in Kagi Assistant, based on the reported tokens and costs:

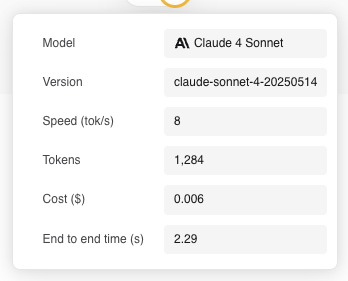

First message in conversation:

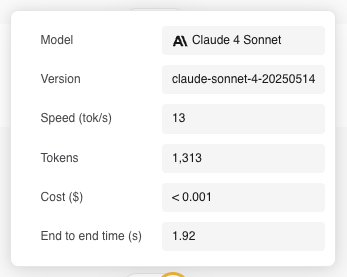

Second message in conversation:

However, nothing indicates that prompt caching is in effect aside from the cost. To make this more transparent, I propose that the response info box show what is being cached. Currently it shows only the total number of tokens; it could also show the number of cached tokens, the number of new tokens, or both.

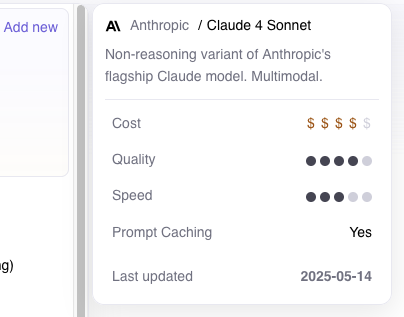
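To make the proposed breakdown concrete, here is a minimal sketch of how the info box text could be derived. The `Usage` type, its field names, and the output wording are all hypothetical; I don't know how Kagi actually receives or stores usage data from providers.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    total_tokens: int   # what the info box shows today
    cached_tokens: int  # hypothetical field: tokens served from the provider's cache


def format_usage(usage: Usage) -> str:
    """Render the total alongside the cached/new split."""
    # New tokens are whatever part of the total was not read from the cache.
    new_tokens = usage.total_tokens - usage.cached_tokens
    return (
        f"Tokens: {usage.total_tokens:,} "
        f"({usage.cached_tokens:,} cached, {new_tokens:,} new)"
    )


print(format_usage(Usage(total_tokens=12000, cached_tokens=10000)))
# Tokens: 12,000 (10,000 cached, 2,000 new)
```

For a model without prompt caching, `cached_tokens` would simply be 0, so the same line works for every model.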

Moreover, it would be helpful to see this information in the model picker to help me choose an appropriate model, since I don't think all providers support prompt caching. A longer conversation might be cheaper on an expensive model that supports prompt caching than on a cheaper model that doesn't.
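A rough back-of-the-envelope sketch of that last point, with made-up numbers: the prices, the 90% cache discount, and the 2,000 tokens per turn are assumptions for illustration, not real Kagi or provider pricing. It also ignores output tokens and any surcharge for writing to the cache.

```python
def conversation_input_cost(turns, tokens_per_turn, price_per_mtok, cached_discount=0.0):
    """Total input cost when every turn resends the full conversation history.

    cached_discount is the fraction taken off the price for tokens that were
    already sent in an earlier turn (0.0 means no prompt caching).
    """
    total = 0.0
    for turn in range(1, turns + 1):
        history = (turn - 1) * tokens_per_turn  # tokens already sent in earlier turns
        new = tokens_per_turn                   # tokens added by this turn
        total += new * price_per_mtok / 1e6
        total += history * price_per_mtok * (1 - cached_discount) / 1e6
    return total


# Hypothetical models: "expensive" at $3 per million input tokens with a 90%
# discount on cached tokens, "cheap" at $1 per million with no caching.
for turns in (2, 20):
    expensive = conversation_input_cost(turns, 2000, 3.0, cached_discount=0.9)
    cheap = conversation_input_cost(turns, 2000, 1.0)
    print(f"{turns} turns: expensive+cache ${expensive:.4f}, cheap ${cheap:.4f}")
```

Under these assumptions the cheap model wins on a 2-turn conversation, but by 20 turns the expensive model with caching costs roughly half as much, because the resent history dominates the bill.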

I'm sure your designers can do better, but it could be as simple as:

It will just be there for users who want it, and it won't bother users who don't care about it.