So I realize Kagi's whole tack is JavaScript that degrades gracefully, but the Assistant is by far the worst and slowest interface for interacting with an LLM for me. I get constant frame drops, and the scrolling behavior is really janky (for example, if the LLM is writing a response that goes below the fold, I cannot scroll until it is done).

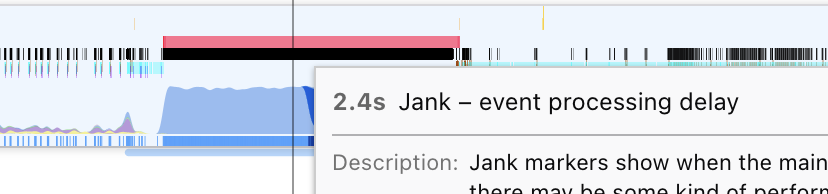

Above is a typical query I captured in Firefox. 2.4s of jank while responding! To compare, I had claude.ai just emit a long story and had a hard time finding any main thread blocking at all. The most I could provoke was about 200ms of jank, and only sometimes, when submitting a query from the Claude homepage.

I've been sort of frustrated with this for months, but have only just started using Anthropic's UI instead because it feels so much smoother. I think the whole JS-only-as-enhancement tack only works if the experience is actually faster and leaner than the JS-heavy alternatives.

Kagi Assistant shouldn't cause my browser to drop frames or block the main thread for seconds while responding. It should scroll with the response as it's being emitted. It should not block scroll while emitting text.
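
To make that concrete, here's a rough sketch of the kind of thing I mean. I have no idea how Kagi's frontend is actually built, so every name here (`ChunkBatcher`, `shouldFollow`, the 32px slack) is made up for illustration. The idea is just: coalesce streamed chunks so the page is updated at most once per frame, and only auto-scroll if the reader is already near the bottom.

```typescript
// Hypothetical sketch, not Kagi's code. All names are invented for illustration.

type Render = (text: string) => void;
type Schedule = (flush: () => void) => void;

/** Coalesces streamed chunks so rendering happens at most once per scheduled tick. */
class ChunkBatcher {
  private pending: string[] = [];
  private scheduled = false;
  private readonly render: Render;
  private readonly schedule: Schedule;

  constructor(render: Render, schedule: Schedule) {
    this.render = render;
    this.schedule = schedule;
  }

  /** Queue a chunk; schedule a single flush if one is not already pending. */
  push(chunk: string): void {
    this.pending.push(chunk);
    if (this.scheduled) return;
    this.scheduled = true;
    this.schedule(() => {
      this.scheduled = false;
      const text = this.pending.join("");
      this.pending = [];
      this.render(text);
    });
  }
}

/**
 * Follow the stream only while the reader is already within `slackPx` of the
 * bottom, so scrolling up mid-response is never overridden.
 */
function shouldFollow(
  scrollTop: number,
  clientHeight: number,
  scrollHeight: number,
  slackPx: number = 32,
): boolean {
  return scrollHeight - (scrollTop + clientHeight) <= slackPx;
}
```

In a browser, the scheduler would presumably be something like `(flush) => requestAnimationFrame(() => flush())`, and `render` would check `shouldFollow` against the container's `scrollTop`, `clientHeight`, and `scrollHeight` before appending text, then set `scrollTop = scrollHeight` only if it returned true.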