Currently, when chatting with an assistant model, markdown/latex is rendered only in the model's own messages. I believe it would be a good idea to also render markdown/latex in the messages the user sends.

A good example of a website that does this is the Vercel AI SDK Demo. As shown in the comparison below, the demo page renders both the model's and the user's markdown (the Vercel demo doesn't seem to support latex).

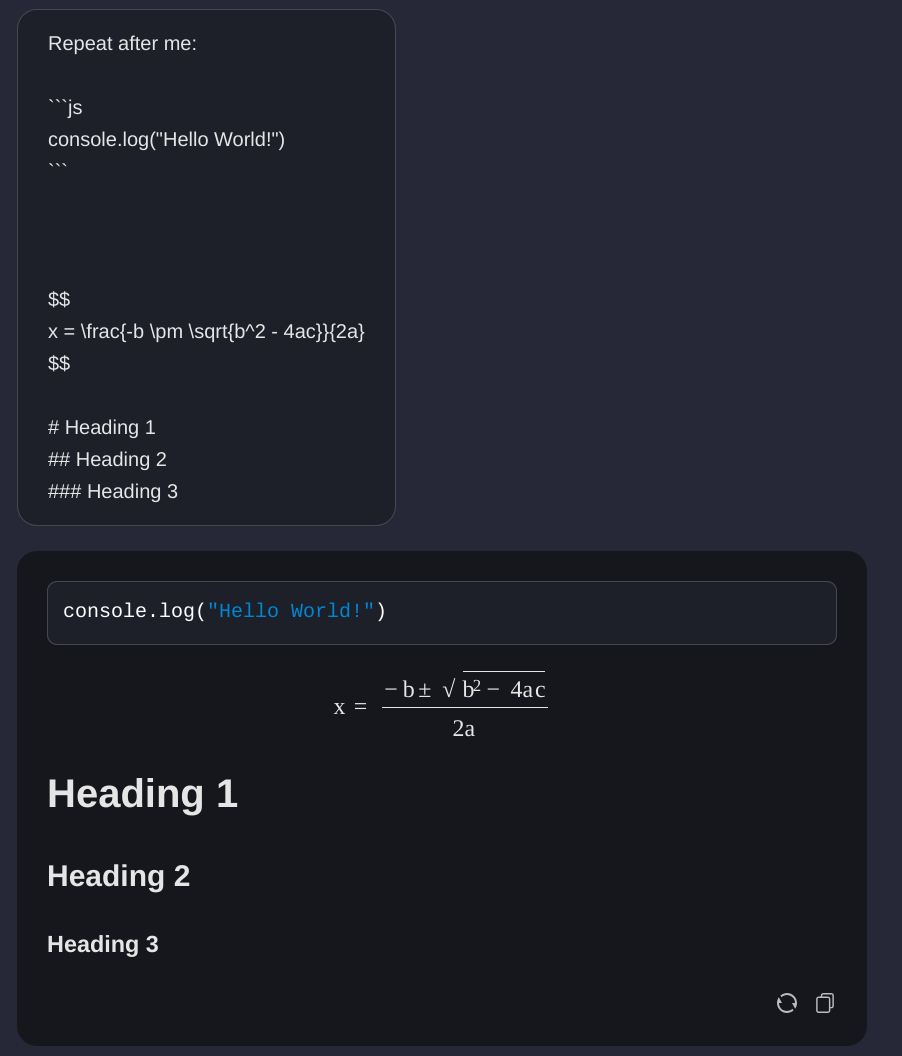

Kagi Assistant:

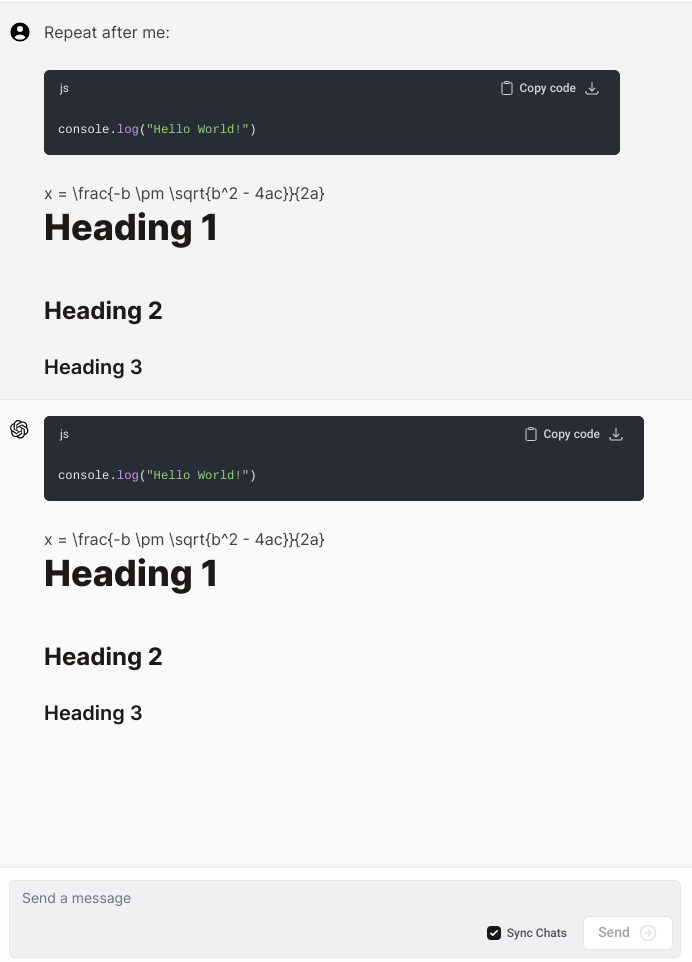

Vercel AI SDK Demo:

This would be useful primarily when talking to a model about math. Right now, if I ask a model for help with a math problem and give it the problem in latex, I have to reread the raw latex to confirm that I didn't make a mistake when typing it. It would be much nicer to have it rendered the same way messages from the model are.

Rendering markdown would probably be less useful than rendering latex, but it would still be nice to at least see the code you give a model with syntax highlighting.